Difference between revisions of "Phasing"

m (typo) | m (→Compute Phase) | ||

| Line 31: | Line 31: | ||

On the exu side are all your standard arithmetic and logic operations. All floating point operations too. And since comparison results are produced explicitly too and are not implicitly routed over global condition codes they are all in there too. | On the exu side are all your standard arithmetic and logic operations. All floating point operations too. And since comparison results are produced explicitly too and are not implicitly routed over global condition codes they are all in there too. | ||

| − | The most important operation to be on the flow side in the compute phase is [[ | + | The most important operation to be on the flow side in the compute phase is [[Instruction_Set/load|load]]. It requires belt operands to compute addresses from, and then schedules the load to retire some time later. Pure address arithmetic without loads is also on the flow side, sharing the same hardware. |

=== Call Phase === | === Call Phase === | ||

Latest revision as of 07:29, 9 June 2015

Mill instructions are potentially very wide on the higher end machines. Much wider even than any of the VLIW designs of the past or current GPUs, over 30 operations per instruction and per cycle.

To utilize such levels of parallelism, the operations within one instruction are executed in different phases, which are executed in consecutive cycles.

Contents

The Phases in Order

Which phase an operation is assigned to depends on how it produces and consumes values. This is because it determines how the functional units implementing the operations need to be wired on the chip.

The order those phases are executed in is determined by data flow: Producers before consumers to maximize instruction level parallelism.

The phasing is almost directly expressed in the instruction Encoding, and the Decode order maps to phase order, too.

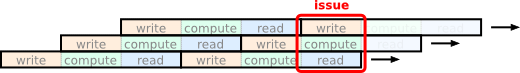

Just as the decode of one instruction is stretched over 3 cycles, the issuing of the operations in an instruction is too. In 3 consecutive instructions, phase 1 operations of instruction 3 are issued in the same cycle as the phase 2 operations of instruction two and the phase 3 operations of instruction 1. And barring stalls this is the steady state in the system, over branches and everything: the operations of 3 different phases from 3 different instructions are issued every cycle.

And while we have only mentioned 3 phases so far, there are actually more, 5 or even 7, depending on how you look at it. The additional phases could be all considered subphases of the compute or writer phase.

Reader Phase

Reader Operations produce values for later consumption and put them on the Belt, but have no dynamic operands that need to be pulled from the belt. Reader operation can occur in both the exu and flow instruction stream. There are no data dependencies in reader operations.

Because the exu stream instruction format is optimized for many small operations with short hardware operands the reader operations that query internal hardware state are placed on the exu side.

The flow side is optimized for large immediates, so larger direct constants are encoded there.

Reader phase operations are all latency 1. They all take one cycle, so they can immediately be consumed by the operations in the next phase. On the exu side, all the values are read from fast registers. And while on the flow side the constants are only available on the second decode cycle, the extracted values can immediately and directly retire onto the belt with no latency, they don't need to be pulled from anywhere. So in either case the values produced by the reader phase are available to the compute phase of the same instruction.

Compute Phase

This is where all the major data manipulation happens. Compute instructions take values from the belt, although most have limited support for small immediates too, and produce new values from them to put on the belt again. Compute operation latency is always defined and fixed for each, this is static scheduling, but can vary significantly. They also can occur in both instruction streams.

On the exu side are all your standard arithmetic and logic operations. All floating point operations too. And since comparison results are produced explicitly too and are not implicitly routed over global condition codes they are all in there too.

The most important operation to be on the flow side in the compute phase is load. It requires belt operands to compute addresses from, and then schedules the load to retire some time later. Pure address arithmetic without loads is also on the flow side, sharing the same hardware.

Call Phase

The call phase is a bit special. Its operations are only available in the flow instruction stream. And from the phasing perspective of the caller they don't require any cycles, and in a sense are an extension of the compute phase. Although they of course do need cycles to execute and in reality take by far the most cycles of all the phases. The call phase operations doen't actually produce any new values, they just rename/reroute existing values to be arguments for the new code block. The new values are then created in that new code block for the later phases.

The call instruction itself takes one cycle. But it puts the data flow within the current frame on hold and executes all the instructions in the called EBB chain, including all the other calls in there. And when there is more than one call in an instruction they are called back to back, chaining into each other.

So while an instruction is oblivious to all the cycles spent in calls, they are by no means free. The only way an instruction might be able to tell is when the results of long latency compute operations are available after the call phase. But this is scheduled.

This obliviousness of the following phases to the cycles used by the calls in the instruction is also what enables the emulation of operations that are not natively implemented in hardware on a core across the whole family of Mill chips. The program semantics are not affected. Even the belt layout from the perspective of the later phase operations is the same. Only the time and resources it takes to produce those values on the belt are different.

Call is actually not the only operation in the call phase. There are several variants of calls, like conditional calls. Also the inner operation for looping belongs in here.

Pick Phase

The pick phase only has the pick and recur operations. They are encoded on the exu side and take 3 Belt parameters. They have zero latency, because they are implemented in the renaming/rerouting of the Crossbar and not in any functional unit. There is no pipeline and no inputs or new outputs. There are dedicated slots though to encode them in.

What the pick phase does, is selecting a value based on a predicate and drop it to the front of the belt. This is the final step before all the data manipulations in the previous phases can be made permanent and become global state in writes. And you make sure you got the correct values.

Writer Phase

The writer operations have input parameters from the belt, but drop no values back on it. As far as the internal data flow is concerned, writer operations are pure sinks.

That is not to say they do nothing. Quite the opposite. They all write belt values into more permanent data sinks to turn local values that have been contained on the belt into global state.

On the flow side there are the store operations for writing into memory. But also spill to save values into the Scratchpad.

On the exu side there is wr for writing into special registers and other special sinks.

The operations that manipulate the security/protection state are also part of the writer phase.

Conform Phase

The conform phase does for the following transfer phase what the pick phase does for writer phase: it reorders the belt values to put them into the position the next operations expect them to be. The return operations can do that themselves via specifying the return values. But normal branches can't do this and don't change the frame. Nevertheless the new code blocks expect values to be in specific belt positions. The conform phase operations make sure that they are, when necessary.

The conform phase takes a cycle, but almost always this can be masked with the writer phase operations working in parallel.

Transfer Phase

This is where code transfers happen, i.e. branches and returns. Both conditional and unconditional. Calls are code transfers too of course, but they are not really changes to the control flow, only longer detours. Branches are were truly different paths are taken.

Issue vs. Retire

It's important to emphasize that phasing determines the order operations issue in within an instruction, not the order they retire in. While a majority of operations only take one cycle, and there the issue order indeed defines the retire order, there are many operations that do not. Putting the operations in the right instruction to order their retire times appropriately to when they need to be available is what Static Scheduling does at compile time.

This difference between issue and retire cycle is also what makes the cycle saving gains of phasing across control flow possible.

The branches of an instruction and the reads of the next instruction issue in the same cycle. Since reads don't depend on Belt operands it is always safe to start decoding and issuing them. When the branch Prediction is correct, that cycle in the readers is saved, when not, the read retires are canceled and there is a stall.

And at the end of a basic block, all the data writes and logically following branches are issued in the same cycle, and no matter which way the branches go, the writes retire.

Theory

The operations a computer performs can always be divided into different categories, depending on what they do. This goes back to the 3 kinds of terms in lambda calculus (variable, abstraction, application) or the equivalent 3 sets of symbols a Turing machine works with (state, alphabet, shift).

Even a one instruction set computer has to perform the 3 tasks in its one instruction, generally as a compute, a compare and a control flow part of the instruction.

In all those cases the reading and writing of data happens implicitly or is described separately of the formalism, but it can go the other way around too. In transport triggered architectures only the reading and writing of data is explicit, and all the other aspects of computation and comparing and control are implicit as side effects.

And there are many many more equivalent theories of computing that all choose to make some of these aspects more explicit and other less so. Pretty much all programming languages and computer architectures are.

What is common to all these approaches is that they define an order, a precedence of how those parts interact. They all define a most basic directed data flow, and the power comes from repeating it over and over.

Rationale

All computer architectures of course have to account for this. But in historical designs the engineering is primarily driven by the physical constraints of the hardware at the gate level. And all computer architectures in common use today are actually historical designs conceived 30-40 years ago. So on the instruction level the logical data flow grouping was more or less ad hoc, wherever the bits and wires fit. The instruction streams are flat and the data and control flows emerge from them ad hoc, too. The hardware had no real structure to work with and expect and be prepared for. That's one of the reasons out of order exists: it looks ahead in the instruction flow and tries to bring all those flat opaque instructions into a better ordered data and control flow for the available hardware. This is what the long pipelines are for: to have a window to find the data flows and then reorder them to best fit the hardware resources.

On the Mill those core data flows and their ordered phases are explicitly encoded in the instructions. Their most basic order is right there, within every instruction. Usually several within every instruction in parallel actually. And stringing them together into an instruction stream funnels the data flow through the available hardware in an almost direct mapping.

In doing so, the usable instruction level parallelism is essentially tripled on average, because all three phases of the most basic data flow can be done in parallel, just phase shifted by one iteration. This happens over control flow barriers, and actually only is beneficial over control flow barriers when compared to VLIW architectures. But control flow is very common, and usually the most severe road block to data flow. It's common enough to happen on almost every Mill instruction. And when there is no control flow, the Mill can exploit all its available width even more freely.

Media

Presentation on Execution and Phasing by Ivan Godard - Slides