Memory

A lot of the power and performance gains of the Mill, but also many of its security improvements over conventional architecures come from the various facilities of the memory management. Most subsystems have their own dedicated pages. This page is an overview.

Contents

Overview

The Mill architecture is a 64bit architecture, there are no 32bit Mills. For this reason it is possible and indeed prudent to adopt a single address space (SAS) memory model. All threads and processes share the same address space. Any address points to the same location for every process. To do this securely and efficiently the memory access protection and address translation have been split into two separate modules, whereas on conventional architectures those two tasks are conflated into one.

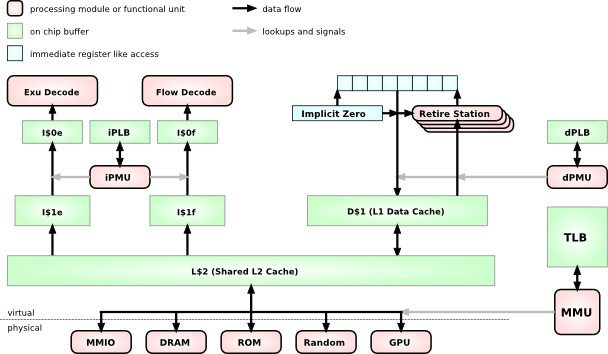

As can be seen from this rough system chart, There is a combined L2 cache, although some low level implementations may choose to omit this for space and energy reasons. The Mill has facilities that make an L2 cache less critical.

L1 caches are separate for instructions and data already, and even more, they are already separate for ExuCore instrucions and FlowCore instructions. Smaller, more specialized caches can be made faster and more efficient in many regards, but chiefly via shorter signal paths.

The D$1 data cache feeds into the retire stations with load operations and recieves the values from the store operations.

Address Translation

Because address translation is separated from access protection, and because all processes share one address space, the translation and TLB accesses can be moved below the caches. In fact the TLB only ever needs to be accessed when there is a cache miss or evict. In that case there is a +300 cycle stall anyway, which means the TLB can be big and flat and slow and energy efficient. The few extra cycles for a TLB lookup are largely masked by the system memory access.

On conventional machines the TLB is right in the critical path between the top level cache and the functional units. This means the TLB must be small and with a complex hierarchy and fast and power hungry. And you still spend up to 20-30% of your cycles and power budget on TLB stalls and TLB hierarchy shuffling.

Reserved Address Space

The top 16th of the address space is reserved to facilitate fast protection domain or turf switches with secure stacks. More on this there.

Retire Stations

Retire stations are the FUs or Slot for the load operation. They implement the load operation. The load operation is explicitly scheduled, i.e. it has a parameter which determines exactly at which point in the future it has to make the value available and drop it on the Belt. This explicit static scheduling allows the hiding of almost all cache latencies in memory access. A DRAM stall will still have the same cost, but due to many innovations in memory access the amount of DRAM accesses has been vastly reduced too.

Another important aspect of this delayed load operation is, that it will not load the value at the point of the issueing of the load operation, but at the point of when it is scheduled to yield the value. This makes the load hardware immune to Aliasing, which means the compiler can stop worrying about aliasing completely and aggressively optimize.

This is achieved by the active retire stations, i.e. the retire stations that have a load pending to return, monitor the store wires for stores on their address. And whenever they see there is a store on their address they just copy the value for later return.

Implicit Zero and Virtual Zero

Loads from uninitialized but accessible memory always yield zero on the Mill. There are two mechanisms to ensure that.

The first is virtual zero. When a load misses the caches and also misses the TLB, it means there have been no stores to the address yet, and in this case the MMU returns zero for the load to bring back to the retire station. The big gain for this is that the OS doesn't have to explicitly zero out new pages, which would be a lot of bandwidth and time, and accesses to uninitialized memory only take the time of the cache and TLB lookups instead of having to do memory round trips.

This also has security benefits, since no one can snoop on memory garbage piles.

An optimization of this for data stacks is the implicit zero. The problems of uninitialzed memory and of bandwidth waste that the virtual zero addresses for general memory accesses are even more compunded for the data stack, because of the high frequency of new accesses and because of the frequency with which recently written data is never used again. On conventional architectures this causes a staggering amount of cache thrashing and superfluous memory accesses.

The stackf instruction allocates a new stack frame, i.e. a number of new cache lines, but it does so just by putting markers for those cache lines into the implicit zero registers.

When a subsequent load happens on a newly allocated stack frame, the hardware knows it is a stack access due to the well known region and stack frame Registers. The hardware doesn't even need to check the dPLB or the top level caches, it just returns zero. So while virtual zero returns zero with only the cost of the cache accesses for uninitialized memory, for the most frequent case of uninitialized stack accesses you don't even have top level cache delays, but immediate access. And of course it also makes it impossible to snoop on old stack frames.

Only when a store happens on a new stack frame will an actual new cache line be allocated, the new value be written and the rest of the cache line be set to zero, all by hardware.

Caches

Media

Presentation on the Memory Hierarchy by Ivan Godard - Slides