Belt

The belt plays the role that general-purpose registers play in traditional processors: it is the source of operands and the destination of results for hardware operations, and it serves as a very short-term data store. Depending on the Mill family member, a belt has 8, 16, or 32 positions, each of which can be thought of as a general-purpose register.

There are important differences, though, which is why the belt has its own terminology.

Semantics

First of all, belt positions are read-only: once a value has been created, it cannot be changed. This makes data dependencies trivial to track, and as a result Hazards caused by overwriting a value that is still in use cannot occur.

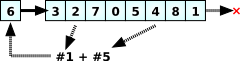

With a limited number of positions and immutable values, this would not be very useful on its own. What makes it work is that a newly created value is dropped at the front of the belt, every other value advances by one position, and the oldest value at the end is discarded. This resembles a conveyor belt, hence the name.

Input operands for hardware operations are referred to by belt position index. A value's index advances each time a newer value is dropped at the front, so values are addressed not by a fixed location but relative to when they were dropped. This is called temporal addressing. It is tedious to track by hand in machine language but trivial for a compiler.

In fact it can be viewed as a 1:1 mapping of the ubiquitous SSA data flow representation used in most modern compilers. This makes obsolete the complicated and sub-optimal register mappings that discard much of the information the compiler has. You never have to use belt position numbers or register names, even in the lowest-level assembly-equivalent languages; you can always use symbolic names in SSA form. There is no register renaming and no shuffling of values between registers. And although the programming model is one of values moving along a belt, the implementation never actually moves values; only indices are incremented.
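Temporal addressing can be illustrated with a toy model of the belt as the programmer sees it. This is only a sketch: the class and method names (`Belt`, `drop`, `read`) are made up for the example and do not correspond to any Mill tool or instruction, and it models the programming view, not the hardware.

```python
from collections import deque


class Belt:
    """Toy model of the belt as seen by the programmer (hypothetical names)."""

    def __init__(self, length=8):
        # A deque with maxlen discards the oldest value when a new one is dropped.
        self._slots = deque(maxlen=length)

    def drop(self, value):
        """Drop a new value at the front; every older value moves back one position."""
        self._slots.appendleft(value)

    def read(self, position):
        """Read the value at a belt position; position 0 is the most recent drop."""
        return self._slots[position]


belt = Belt(length=8)
belt.drop(3)                              # belt: [3]
belt.drop(4)                              # belt: [4, 3]
belt.drop(belt.read(0) + belt.read(1))    # add positions 0 and 1 -> belt: [7, 4, 3]

# The value 3 was first at position 0, then 1, and is now at position 2.
assert belt.read(2) == 3
assert belt.read(0) == 7
```

Note that the add names only its two source positions. The same value is reached through a different index after every drop, which is exactly the bookkeeping a compiler does when it lowers SSA names to belt positions.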

Using and Retaining Values

While new values and operation results are always dropped at the front of the belt, operands can come from any belt position. The compiler takes care to place producers close to the consumers of their values, so the values needed as operands can almost always be found on the belt. Not always, though, and misses happen more often on the shorter belts of less powerful cores. For the rare cases in which a value is needed for longer than it stays on the belt, a Scratchpad is provided, with spill and fill instructions that store those values to and load them from a fast hardware buffer managed by the Spiller.
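The spill and fill round trip can be sketched in a few lines. This is a simplified model under stated assumptions: the scratchpad is a plain dictionary keyed by a hypothetical slot number, the belt is only 4 positions long so that values fall off quickly, and the function signatures are invented for illustration rather than taken from the Mill instruction set.

```python
from collections import deque

BELT_LENGTH = 4                      # deliberately short for the example

belt = deque(maxlen=BELT_LENGTH)     # position 0 is the front
scratchpad = {}                      # hypothetical: slot number -> saved value


def drop(value):
    """Drop a value at the front of the belt."""
    belt.appendleft(value)


def spill(position, slot):
    """Copy a belt value into a scratchpad slot before it falls off the belt."""
    scratchpad[slot] = belt[position]


def fill(slot):
    """Drop a saved scratchpad value back onto the front of the belt."""
    drop(scratchpad[slot])


drop(42)                 # a value that will be needed again much later
spill(0, slot=0)         # save it while it is still on the belt
for v in range(BELT_LENGTH):
    drop(v)              # four newer values push 42 off the end
assert 42 not in belt

fill(0)                  # bring it back as a fresh value at the front
assert belt[0] == 42
```

The filled value re-enters the belt like any other result, so consumers address it by its new position rather than by where it used to be.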

Overall, values rarely spend more than one cycle on the belt. That is what static scheduling sets out to do, after all: optimize data flow. Belt lengths are chosen to be just large enough to hold all the result values that can be produced in one cycle, but not much more.

Multiple Results

Since new values from operation results are always implicitly dropped at the front of the belt, operations don't need to encode destinations for them. This also makes operations that yield multiple results straightforward to implement. The most important example is division, which on register machines usually needs ugly special-case treatment. The same holds for the normal call instruction, which makes functions with multiple results native to the hardware, with no penalty.
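A division that yields both quotient and remainder shows why implicit destinations make multiple results easy. The sketch below is illustrative only: `divrem` is a hypothetical operation name, and the order in which the two results are dropped is an assumption made for the example.

```python
from collections import deque

belt = deque(maxlen=8)   # position 0 is the front


def drop(*values):
    """Drop one or more results; the last value listed ends up at position 0."""
    for v in values:
        belt.appendleft(v)


def divrem(pos_a, pos_b):
    """Hypothetical combined divide: reads two belt positions, drops two results."""
    a, b = belt[pos_a], belt[pos_b]
    quotient, remainder = divmod(a, b)
    drop(quotient, remainder)        # no destination operands are needed


drop(17)          # belt: [17]
drop(5)           # belt: [5, 17]
divrem(1, 0)      # 17 divided by 5
assert list(belt) == [2, 3, 5, 17]   # remainder 2, quotient 3, then the inputs
```

The operation encodes only its sources. Both results simply become the newest values on the belt, and the inputs remain available unchanged behind them.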

Function Calls

A function call creates a new frame. That means it also creates a new belt for that frame. And that belt is pre-seeded with copies of the values from the argument list of the call operation. The callee has no access to the belt of the caller and vice versa. On return, the caller's belt is restored and the returned values are dropped at the front, as with all operation results.
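The call semantics can be modelled as follows. This is a rough sketch under assumptions: the helper names are invented, the callee is an ordinary Python function that receives its own belt, and placing the first argument at position 0 of the new belt is a choice made for the example, not something stated here.

```python
from collections import deque

BELT_LENGTH = 8


def call(caller_belt, function, arg_positions):
    """Model a call: fresh belt for the callee, results dropped on the caller's belt."""
    args = [caller_belt[p] for p in arg_positions]    # copies of the arguments
    callee_belt = deque(args, maxlen=BELT_LENGTH)     # the callee sees only these
    results = function(callee_belt)
    for r in results:                                 # results drop like any other
        caller_belt.appendleft(r)


def sum_and_diff(belt):
    """Hypothetical callee returning two results."""
    a, b = belt[0], belt[1]
    belt.appendleft(a + b)    # callee-local temporary, never visible to the caller
    return (a + b, a - b)


caller = deque([10, 3, 99], maxlen=BELT_LENGTH)       # position 0 holds 10
call(caller, sum_and_diff, arg_positions=[0, 1])
assert list(caller) == [7, 13, 10, 3, 99]
```

The caller's earlier values are exactly where they were, shifted only by the two returned results. Nothing the callee dropped on its own belt leaks back.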

Belt Position Data Format

The required word size of the Mill is 64 bits, and that is the minimum size of each belt position. High-end machines can hold 128-bit values there. But 32-bit, 16-bit, and 8-bit values are just as possible; only the relevant bits are used, moved, and updated for each type. A belt position isn't limited to a scalar value either: it can just as well hold a 4-element SIMD vector with base elements of any byte width.

Metadata

The kind of value a belt position holds, the byte width of its base elements, and whether it is a vector are determined by a few bits of metadata attached to each value. This metadata is set when the value is created, whether by a load instruction or by any other operation. It is preserved in the Scratchpad but discarded on store.

Hardware operations using belt operands determine the operand type from the metadata and accordingly become operations of the specified width, or vector operations, without this information having to be encoded in the instruction stream.
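The idea of a single opcode specialised by operand metadata can be sketched like this. The `BeltValue` type, the `load` and `add` helpers, the wrap-around overflow behaviour, and the handling of mismatched operands are all assumptions made for illustration; none of them are specified here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)              # frozen: belt values are immutable
class BeltValue:
    elements: Tuple[int, ...]        # one element for a scalar, several for a vector
    width: int                       # element width in bytes; this is the metadata


def load(raw_elements, width):
    """A load sets the metadata at the moment the value is created."""
    mask = (1 << (8 * width)) - 1
    return BeltValue(tuple(e & mask for e in raw_elements), width)


def add(a, b):
    """One 'add' opcode; width and scalar/vector shape come from the operands."""
    if a.width != b.width or len(a.elements) != len(b.elements):
        raise ValueError("operand metadata mismatch")   # assumed behaviour
    mask = (1 << (8 * a.width)) - 1                     # assumed: wrap at operand width
    summed = tuple((x + y) & mask for x, y in zip(a.elements, b.elements))
    return BeltValue(summed, a.width)


byte_sum = add(load([250], 1), load([10], 1))                     # 8-bit scalar add
vec_sum = add(load([1, 2, 3, 4], 4), load([10, 20, 30, 40], 4))   # 4 x 32-bit vector add
assert byte_sum.elements == (4,)            # 260 wraps to 4 in 8 bits
assert vec_sum.elements == (11, 22, 33, 44)
```

The caller never says "8-bit add" or "vector add". The same `add` behaves as either, depending on what the operands carry.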

Rationale

Why not just use registers?

Hazards have been mentioned already. Immutable values make them impossible: the processor doesn't need to track whether a value is still what it is expected to be, whether it is only a temporary value, and so on. On register machines this tracking takes huge effort and comes at great cost, especially on out-of-order machines.

Registers serve two roles on traditional architectures: as source and destination of data, and as temporary storage.

The distinction is not always clear-cut, but generally it can be made along these lines: values that are used only once are pure data flow, and values that are used more than once are data storage. These usages have been quantified for typical general-purpose workloads:

About 80% of values are referenced once, 14% are used twice or more, and 6% are never used at all. [1] Simulations have shown that the single-use case is essentially completely covered by belt values with proper data flow layout, and about half of the multiple-use cases are covered too. The Scratchpad needs to get involved for only about 7% of produced values. Those figures obviously vary somewhat with belt size and workload, but this is why multiple processor configurations exist: to provide the right resources for the workloads at hand.
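The 7% figure follows from the percentages quoted above. A quick check, assuming "about half" is taken as exactly one half:

```python
# Percentages of produced values, as quoted in the text.
used_once, used_multiple, never_used = 80, 14, 6
assert used_once + used_multiple + never_used == 100

# Assumption: "about half" of the multiple-use values is treated as exactly half.
multiple_on_belt = used_multiple / 2
needs_scratchpad = used_multiple - multiple_on_belt
used_served_by_belt = used_once + multiple_on_belt

assert needs_scratchpad == 7.0        # share that needs the Scratchpad
assert used_served_by_belt == 87.0    # used values served from the belt alone
```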

Another advantage of immutable, expiring values is that you don't need rename registers for temporary values that must still be addressable by their canonical register name. The rename registers that become necessary in instruction sets with registers are a big source of data shuffling. And since they must all be able to serve as source or destination for values, the cost of interconnecting all those registers with each other and with the different functional units is an explosion of complexity, and one of the biggest energy and die space demands in modern conventional CPUs.

The belt drastically reduces the number of data sources and drains, and reduces the interconnect between them even more dramatically, all without sacrificing the number of values available to execution units.

Implementation

It's important to remember that the belt is only a programming model, an interface for the compiler. Internally, no values are moved around. There is just a set of tagged store cells, generally twice the belt length in number, interconnected by a crossbar switch with the functional units and all other consumers and producers. The tags control access and data flow. A tag consists of the Frame ID and the current belt index, which is incremented whenever a new value is dropped into the frame.
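The tagged-cell scheme can be approximated in software as follows. This is a loose sketch, not a description of the actual hardware: the class name, the round-robin cell allocation, and the linear tag search (standing in for the crossbar's tag match) are all assumptions, and the model ignores what happens when several frames compete for the same cells.

```python
BELT_LENGTH = 8
NUM_CELLS = 2 * BELT_LENGTH          # "generally 2x belt length"


class TaggedBelt:
    """Cells are written once and never shifted; only a per-frame counter advances."""

    def __init__(self):
        self.cells = [None] * NUM_CELLS   # each cell: (frame_id, drop_number, value)
        self.drop_count = {}              # per-frame number of drops so far
        self.next_cell = 0

    def drop(self, frame_id, value):
        n = self.drop_count.get(frame_id, 0)
        self.cells[self.next_cell] = (frame_id, n, value)    # write exactly one cell
        self.next_cell = (self.next_cell + 1) % NUM_CELLS    # simplistic allocation
        self.drop_count[frame_id] = n + 1                    # only the index moves

    def read(self, frame_id, position):
        if not 0 <= position < BELT_LENGTH:
            raise IndexError("belt position out of range")
        wanted = self.drop_count.get(frame_id, 0) - 1 - position
        for cell in self.cells:                              # stands in for tag matching
            if cell is not None and cell[0] == frame_id and cell[1] == wanted:
                return cell[2]
        raise LookupError("no value at that belt position")


hw = TaggedBelt()
hw.drop(frame_id=1, value="a")
first_cell = hw.cells[0]
hw.drop(frame_id=1, value="b")
hw.drop(frame_id=2, value="x")       # a different frame has its own index
assert hw.read(1, 0) == "b"
assert hw.read(1, 1) == "a"          # "a" is now at position 1 ...
assert hw.cells[0] is first_cell     # ... yet its cell was never rewritten
assert hw.read(2, 0) == "x"
```

A belt position is resolved by subtracting it from the frame's drop counter and matching the result against the stored tags, which is why advancing the belt costs nothing more than an increment.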

Media

Presentation on the Belt by Ivan Godard - Slides