Difference between revisions of "Encoding"

Revision as of 04:42, 27 July 2014

The Mill architecture employs a unique split-stream instruction encoding that, despite being wide issue and very dense, enables sustained decode rates of over 30 operations per cycle. It achieves these numbers at a fraction of the energy and transistor cost of mainstream variable-length instruction sets like x86.

Semantics

Extended Basic Block

Code on the Mill is organised into EBBs (extended basic blocks), i.e. batches of code with one entry point and one or more exit points. There is no implicit fallthrough in EBBs: the instruction flow can only leave them through an explicit branch, which means that at least the last operation in every EBB is an unconditional branch. An EBB can contain further conditional branches that go either to the top of other EBBs or to the top of itself. And in contrast to other architectures, an EBB can even contain calls; they don't leave it as long as they return normally. The image to the right is a purely logical view. The right block is even a normal canonical basic block with one entry point and one exit. In reality things are a little more complicated, as will be seen later.
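The EBB rules above can be sketched as a small check. This is only an illustrative model, not Mill tooling: the `Op` class and the operation kinds `"alu"`, `"call"`, `"branch_if"` and `"branch"` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Op:
    # Hypothetical operation kinds: "alu", "call", "branch_if", "branch".
    kind: str
    # Name of the EBB a branch or call refers to, if any.
    target: Optional[str] = None


def is_valid_ebb(ops: List[Op]) -> bool:
    """No implicit fallthrough: the last operation must be an unconditional branch."""
    return bool(ops) and ops[-1].kind == "branch"


def exit_targets(ops: List[Op]) -> List[Optional[str]]:
    """Collect the EBBs that control flow can leave to; calls do not count as exits."""
    return [op.target for op in ops if op.kind in ("branch_if", "branch")]


# A loop body: it may branch back to its own top, and it ends with an explicit branch.
loop = [Op("alu"), Op("call", "helper"), Op("branch_if", "loop"), Op("branch", "done")]
```

Here `is_valid_ebb(loop)` holds because the block ends in an unconditional branch, and the call does not appear among the exit targets because it returns into the same EBB.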

Instructions and Operations and Bundles

The unusual encoding makes it necessary to draw clear distinctions between instructions, operations and bundles, distinctions that are not really needed on traditional machines. In the earliest RISC architectures an instruction, an operation and a bundle are usually the same thing: a word-sized bundle of bits is retrieved from the instruction cache and dropped into the decoder. There one instruction is decoded and issued, resulting in one operation being performed. On wide issue machines one instruction can contain several operations that are all issued together. Modern machines drop a bundle containing several instructions into the decoder at once.

So, a bundle is the batch of bits that gets fetched from memory and dropped into the decoder together.

An instruction is all the operations that get issued to the functional units together.

And an operation is the most basic piece of processing in a functional unit, an add or xor for example.
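The three terms nest, which a minimal data model can make concrete. The class names below mirror the definitions above, but the model itself is an assumption made for illustration and says nothing about the actual bit layout.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Operation:
    name: str  # the most basic piece of processing, e.g. "add" or "xor"


@dataclass
class Instruction:
    operations: List[Operation]  # everything issued to the functional units together


@dataclass
class Bundle:
    instructions: List[Instruction]  # everything fetched and dropped into the decoder together


def operations_in(bundle: Bundle) -> int:
    """Count the operations carried by one fetched bundle."""
    return sum(len(instr.operations) for instr in bundle.instructions)


# Early RISC: one bundle is one instruction is one operation.
early_risc = Bundle([Instruction([Operation("add")])])

# Wide issue: one instruction carries several operations issued together.
wide_issue = Bundle([Instruction([Operation("add"), Operation("xor"), Operation("load")])])

# A bundle that carries several instructions at once.
multi = Bundle([Instruction([Operation("add")]), Instruction([Operation("xor")])])
```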

Split Instruction Streams

Conventionally there are two approaches to instruction encoding: fixed-length instructions and variable-length instructions. Fixed-length instructions are cheap and easy to decode, but don't offer very good code density. Variable-length instructions can offer good code density, but decoding them tends to have polynomial cost. The decode rate of the former therefore tends to be limited by instruction cache size and instruction cache throughput. For the latter the limiting factor is the cost of processing and interpreting the actual bits, of recognizing the instructions in the stream. The best fixed-length instruction decoders can scrape low double digits of instructions per cycle, and Intel's heroics can get up to 4 instructions per cycle on x86. How can this bottleneck be overcome?

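It has to be variable-length encoding for simple code density reasons. No way around that. But since decoding one stream of n instructions is n² in cost, splitting it into two equivalent streams of half the length means each stream costs only a quarter as much to decode, and both together half as much. And when those two streams are split by functionality, you get even more gains in code density and simplicity from being able to give the bit patterns different meanings on each side [1] (The Mill: Split Stream Encoding, http://millcomputing.com/blog/wp-content/uploads/2013/12/mill_cpu_split-stream_encoding.pdf).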
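The arithmetic behind the quadratic-cost argument can be checked with a toy model. It assumes the cost is exactly n² and that the stream splits evenly in two; real decoder costs are more complicated, and the function name `decode_cost` is made up for this sketch.

```python
def decode_cost(n: int) -> int:
    """Toy cost model: decoding n variable-length instructions per cycle costs n squared."""
    return n * n


n = 32                                  # instructions decoded per cycle from one stream
one_stream = decode_cost(n)             # cost of a single decoder handling everything
per_half_stream = decode_cost(n // 2)   # cost of one decoder handling half the stream
two_streams = 2 * per_half_stream       # both half-size decoders together

quarter = per_half_stream / one_stream  # each half-size decoder relative to the full one
half = two_streams / one_stream         # both half-size decoders relative to the full one
```

Under this model each half-size decoder costs a quarter of the single full-size decoder, and the pair together costs half.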

Implementation

There are of course a few technical hurdles to overcome for split-stream encoding. For one, you need two program counters (pcs). In practice there are even three, but more on that under Decode. How do you specify branch targets with more than one pc? Encoding two addresses, in whichever way, is not very efficient. You could have implicit base addresses in dedicated memory regions for the two streams and then encode only one offset. But then you run into significant address space waste whenever the two streams are of different lengths for the same flow of control.

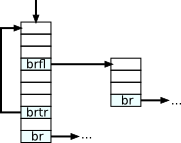

The approach the Mill takes is to have only one address as a branch target, where both program counters end up. From there, one stream walks up through memory and the other stream walks down. The two sides can be of different lengths, as needed. So while this branch target is logically the top of an EBB, in memory it actually points somewhere into the middle of it.
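The single-entry-point layout can be sketched with a list standing in for memory. This is an illustration under simplifying assumptions: slots are whole items rather than variable-length bit fields, and the function names and the choice of which stream goes in which direction are hypothetical.

```python
from typing import List, Tuple


def layout_ebb(down_stream: List[str], up_stream: List[str]) -> Tuple[List[str], int]:
    """Place one stream below the entry point (reversed in memory) and the other above it."""
    memory = list(reversed(down_stream)) + list(up_stream)
    entry = len(down_stream)  # the single branch target, somewhere in the middle
    return memory, entry


def walk(memory: List[str], entry: int, n_down: int, n_up: int) -> Tuple[List[str], List[str]]:
    """Start both program counters at the entry address and walk in opposite directions."""
    up_pc = entry
    down_pc = entry
    up, down = [], []
    for _ in range(n_up):
        up.append(memory[up_pc])      # read, then move to higher addresses
        up_pc += 1
    for _ in range(n_down):
        down_pc -= 1                  # move to lower addresses, then read
        down.append(memory[down_pc])
    return down, up


# The two streams may have different lengths without wasting any address space.
memory, entry = layout_ebb(["d0", "d1", "d2"], ["u0", "u1"])
decoded_down, decoded_up = walk(memory, entry, n_down=3, n_up=2)
```

Both streams are recovered in their original order from one shared address, and the block occupies exactly as many slots as the two streams need together.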

Bit Format

Rationale

Implementation

See Also

Decode, Phasing, Instruction Set

Media

Presentation on the Encoding by Ivan Godard - Slides