Difference between revisions of "Pipeline"


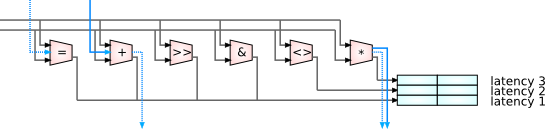

Revision as of 22:39, 11 August 2014

The functional units, organized into pipelines addressable by and referred to as slots from the decoder, are the workhorses, where the computing and data manipulation happens.

Overview

A slot is a cluster of functional units, such as multipliers, adders, shifters, and floating point units, but also units for calls, branches, loads, and stores. The grouping generally follows the phases of the operations the functional units implement, and it also depends on which decoder issues the operations to them.

All the FUs in a slot pipeline also share the same 2 input channels from the belt, at least for the phases that have inputs from the belt.

The operations grouped into one slot may have different latencies, i.e. they take different numbers of cycles to complete. Each FU in the slot can still be issued one new operation every cycle, because the FUs are fully pipelined.

To catch results that were issued in different cycles but retire in the same cycle, the pipeline has a latency register for each possible latency, into which the values are written. These registers are dedicated to their slot, so only that pipeline can write into them.
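The following is a minimal sketch of the idea above, not a description of the actual hardware. It assumes a toy slot with one register per latency; the class name `ToySlot`, its methods, and the operation labels are all hypothetical. It shows two operations issued in different cycles retiring in the same cycle, each landing in the register for its own latency.

```python
from collections import defaultdict


class ToySlot:
    """Toy model of one slot: pipelined FUs plus one register per latency.

    All names here are illustrative assumptions, not Mill terminology.
    """

    def __init__(self, latencies):
        # One dedicated result register for each latency the slot supports.
        self.latency_regs = {lat: None for lat in latencies}
        # Maps a retire cycle to the (latency, value) pairs that retire then.
        self.in_flight = defaultdict(list)
        self.cycle = 0

    def issue(self, latency, value):
        """Issue an operation now; its result retires `latency` cycles later."""
        if latency not in self.latency_regs:
            raise ValueError("no functional unit with that latency in this slot")
        self.in_flight[self.cycle + latency].append((latency, value))

    def tick(self):
        """Advance one cycle and write retiring results to their registers."""
        self.cycle += 1
        retired = self.in_flight.pop(self.cycle, [])
        for latency, value in retired:
            self.latency_regs[latency] = value
        return retired


def demo_same_cycle_retire():
    slot = ToySlot(latencies=[1, 3])
    slot.issue(3, "mul")   # issued in cycle 0, retires in cycle 3
    slot.tick()            # cycle 1
    slot.tick()            # cycle 2
    slot.issue(1, "add")   # issued in cycle 2, also retires in cycle 3
    return slot.tick()     # cycle 3: both results retire together
```

Because each latency has its own register, the two results in `demo_same_cycle_retire()` do not collide even though they retire in the same cycle.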

Operands and Results

Dedicating the latency registers to a slot reduces the number of connecting circuits between all the functional units and registers. Those same latency registers are what is visible on a higher level as Belt locations. The trick is that they are addressed differently for writing, when new values are created, and for reading, when they serve as sources. As mentioned above, the registers can only be written by their dedicated slot; for writing they are locally addressed. But they can serve as belt operands globally, to all slots. Just as global addressing cycles through all the registers for reading, local addressing cycles, on a per-slot basis, through the slot's dedicated latency registers for writing. In contrast to belt addressing, local addressing is completely hidden from the outside; it is purely machine-internal state.
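The split between local write addressing and global read addressing can be illustrated with a small sketch. This is only one way to model it, under loud assumptions: the class `ToyBelt`, the rotating per-slot write index, and the convention that position 0 is the newest value are all made up for illustration and are not taken from the text.

```python
from collections import deque


class ToyBelt:
    """Toy model: per-slot registers written locally, read by global position.

    Hypothetical structure for illustration only.
    """

    def __init__(self, num_slots, regs_per_slot, belt_length):
        if regs_per_slot < belt_length:
            # Keeps this toy safe: a register is never overwritten while a
            # belt position still refers to it.
            raise ValueError("regs_per_slot must be at least belt_length")
        self.regs = [[None] * regs_per_slot for _ in range(num_slots)]
        self.next_local = [0] * num_slots        # hidden, per-slot write index
        self.order = deque(maxlen=belt_length)   # global read order, newest first

    def write(self, slot, value):
        """Only `slot` writes its own registers, using its local index."""
        local = self.next_local[slot]
        self.regs[slot][local] = value
        self.next_local[slot] = (local + 1) % len(self.regs[slot])
        self.order.appendleft((slot, local))

    def read(self, position):
        """Any slot may read by global belt position (0 is the newest value)."""
        slot, local = self.order[position]
        return self.regs[slot][local]


def demo_addressing():
    belt = ToyBelt(num_slots=2, regs_per_slot=4, belt_length=4)
    belt.write(0, "a")
    belt.write(1, "b")
    belt.write(0, "c")
    return [belt.read(0), belt.read(1), belt.read(2)]
```

In this sketch a reader never sees `next_local`; it only names a belt position, which matches the point that local addressing is internal state.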

Result Replay

Each pipeline actually has twice as many latency registers as it would need just to accommodate the values produced by its functional units. This is because a call or interrupt can happen while an operation is in flight over several cycles, and then the frame changes. The operations executing in the new frame need places to store their results, but so do the operations still running for the old frame.

Within a call, of course, more calls can happen, and then the latency registers are no longer enough. There is other frame-specific state too. This is where the Spiller comes in: it monitors frame transitions and, safely and transparently in the background, saves and restores all the frame-specific state that might still be needed. A big part of that is saving the latency register values that would be overwritten in the new frames and restoring them on return.

This saving of results when the control flow is interrupted or suspended in some way is called result replay. It contrasts with execution replay on conventional machines, which throw away all transient state in that case and then restart all the computations, some potentially very expensive, from the beginning.
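As a rough sketch of result replay, the doubled latency registers and the spiller can be modeled as two register banks plus a stack. Everything here is an assumption made for illustration: the class `ReplaySlot`, the two-bank layout, and the list standing in for the spiller are hypothetical and say nothing about how the hardware is actually built.

```python
class ReplaySlot:
    """Toy model of result replay with two register banks and a spill stack."""

    def __init__(self, num_regs):
        self.num_regs = num_regs
        # Double the registers: one bank for the current frame, one spare.
        self.banks = [[None] * num_regs, [None] * num_regs]
        self.active = 0
        self.depth = 0
        self.spiller = []  # stand-in for the spiller's saved frame state

    def write(self, reg, value):
        self.banks[self.active][reg] = value

    def read(self, reg):
        return self.banks[self.active][reg]

    def call(self):
        """Enter a new frame; spill an older frame's bank if it is in the way."""
        target = 1 - self.active
        if self.depth >= 1:
            # The spare bank still holds the caller's caller; save it first.
            self.spiller.append(self.banks[target])
        self.banks[target] = [None] * self.num_regs
        self.active = target
        self.depth += 1

    def ret(self):
        """Return to the caller's frame and restore any spilled older frame."""
        if self.depth == 0:
            raise RuntimeError("return without a matching call")
        self.depth -= 1
        self.active = 1 - self.active
        if self.depth >= 1:
            self.banks[1 - self.active] = self.spiller.pop()


def demo_result_replay():
    slot = ReplaySlot(num_regs=2)
    seen = []
    slot.write(0, "A")      # result produced in the outermost frame
    slot.call()
    slot.write(0, "B")      # result produced in the first callee
    slot.call()             # the outermost frame's bank is spilled here
    slot.write(0, "C")
    seen.append(slot.read(0))
    slot.ret()
    seen.append(slot.read(0))
    slot.ret()
    seen.append(slot.read(0))
    return seen
```

The point of `demo_result_replay()` is that the values written before each call are still there after the return, so nothing has to be recomputed.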

Interaction between Slots

The main way slots interact with each other is by exchanging operands over the belt. The results that one operation places on the belt become the operands for the next.

Some types of neighboring slots, though, can pass operands to each other in the middle of the pipeline. The primary use of this is operand gangs, which overcome the severe input operand restrictions for some special case operations.

Those data paths can also be used to save result replay values when a slot's own latency registers are full, which is a lot faster than going to the spiller right away.

Kinds of Slots

Each slot on a Mill processor is specified to have its own capabilities by having a specific set of functional units in it. This can go so far as every slot pipeline on a chip being unique, but usually this is not the case. The basic reader slots and the load and store units tend to be quite uniform, as do the call slots. The ALU slots are a little more diverse, depending on the expected workloads the processor is configured for, but still not wildly different. The Mill architecture is nevertheless flexible enough to implement special purpose operations and functional units.

Consequently, the different blocks in the instructions encode the operations for different kinds of slots. If the Specification calls for it, every operation slot has its own unique operation encoding. All this is automatically tracked and generated by the Mill Synthesis software stack.