Architecture

Revision as of 04:54, 30 July 2014

Introduction

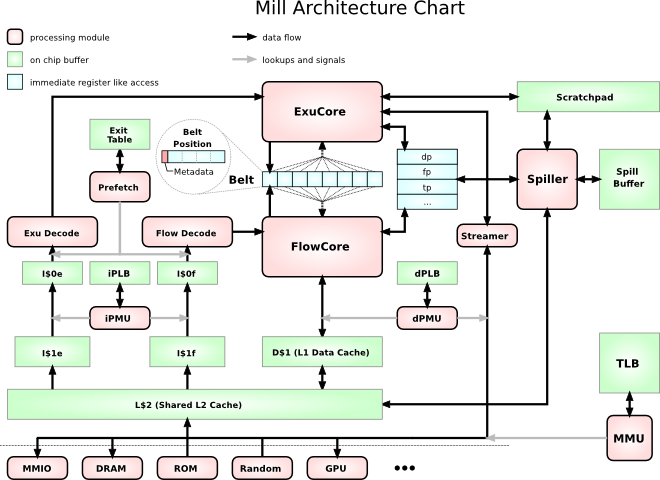

The Mill architecture is a general-purpose processor architecture paradigm, in the sense that a stack machine or a RISC processor is a processor architecture paradigm.

It is also a processor family architecture in the sense that x86 or ARM are processor family architectures.

To classify it briefly: the Mill is a statically scheduled, in-order Belt architecture. As in a DSP, all instructions are issued in the order in which they appear in the binary instruction stream.

Traditionally, this approach has trouble with common general-purpose workloads: branch-heavy control flow, particularly while-loop Execution, and hiding Memory access latency. The Mill addresses these problems, so static scheduling by the Compiler turns most of the work that conventional hardware repeats on every cycle into tasks done once, at compile time. This is where most of the power savings and performance gains over traditional general-purpose architectures come from.
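To make "done once at compile time" concrete, here is a toy greedy list scheduler. It is a sketch under simplifying assumptions, not the Mill toolchain's algorithm: the `Op` type, the fixed per-op latencies, and the issue `width` are hypothetical. The scheduler assigns every operation an issue cycle from known latencies and dependencies; hardware running such a schedule would simply issue each cycle's bundle in order, with no runtime dependency tracking.

```python
from dataclasses import dataclass, field


@dataclass
class Op:
    name: str
    latency: int                                    # cycles until the result is available
    deps: list = field(default_factory=list)        # names of ops whose results this op reads


def schedule(ops, width):
    """Assign each op an issue cycle, once, ahead of execution.

    Ops must be listed in a valid dependency (topological) order.
    An op issues only when all its inputs are ready, and at most
    `width` ops may issue in the same cycle.
    """
    ready_at = {}   # op name -> cycle its result becomes available
    issued = {}     # op name -> chosen issue cycle
    slots = {}      # cycle -> number of ops already placed there
    for op in ops:
        cycle = max((ready_at[d] for d in op.deps), default=0)
        while slots.get(cycle, 0) >= width:         # bundle full: try the next cycle
            cycle += 1
        slots[cycle] = slots.get(cycle, 0) + 1
        issued[op.name] = cycle
        ready_at[op.name] = cycle + op.latency
    return issued


program = [
    Op("load_a", latency=3),
    Op("load_b", latency=3),
    Op("mul", latency=2, deps=["load_a", "load_b"]),
    Op("add", latency=1, deps=["mul"]),
]
print(schedule(program, width=2))   # {'load_a': 0, 'load_b': 0, 'mul': 3, 'add': 5}
```

Narrowing `width` to 1 pushes `load_b` to cycle 1 and everything after it back by one cycle; the point is that this search happens once per program rather than on every cycle in hardware.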

Overview

The general design philosophy behind the Mill can be summarized as follows: remove from the chip, as far as possible, anything that does not directly contribute to computation at runtime; perform those tasks once, and optimally, in the compiler; and use the freed die space for more computation units. This yields much higher single-core performance through more instruction-level parallelism, as well as room for more cores.

Traditional architectures face several hurdles in actually exploiting the instruction-level parallelism that many ALUs make available. Some of the Mill's most distinctive and innovative features emerged from tackling those hurdles and bottlenecks.

The Belt, for example, results from the need to provide many data sources and sinks for all those computational units and to interconnect them without tripping over data dependencies and hazards, and without the polynomial growth in die area and power that such an interconnect would otherwise incur.
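A toy model may help picture how the Belt avoids named destination registers. This sketch assumes the commonly described model: a fixed-length queue where each new result is dropped at the front and operands are addressed by temporal position (`b0` is the newest). The `Belt` class and the length of 4 are illustrative only; the model says nothing about how the hardware implements the interconnect.

```python
from collections import deque


class Belt:
    """Toy belt: a fixed-length queue of results, addressed by recency."""

    def __init__(self, length=8):
        self.slots = deque(maxlen=length)

    def drop(self, value):
        # The newest result becomes b0; a full deque discards the oldest
        # value from the far end, i.e. it "falls off" the belt.
        self.slots.appendleft(value)

    def get(self, pos):
        return self.slots[pos]          # b0 = newest, b1 = the one before, ...


belt = Belt(length=4)
belt.drop(10)                            # b0 = 10
belt.drop(32)                            # b0 = 32, b1 = 10
belt.drop(belt.get(0) + belt.get(1))     # "add b0, b1" drops 42 at the front
print(list(belt.slots))                  # [42, 32, 10]
```

Note that no operation names a destination: results simply appear at the front, and consumers refer to them by how recently they were produced.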

The unusual split-stream, variable-length VLIW Encoding makes it possible to feed all those ALUs with instructions in parallel, in a way that is efficient in both die space and energy, while keeping code density optimal.
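The "split stream" idea can be illustrated with a hypothetical memory layout. The sketch assumes that a block's two instruction streams (called `exu` and `flow` here, an assumption of this sketch) share a single entry address, with one stream laid out upward and the other downward from it. A branch then needs only one target address, and each decoder walks only its own stream. Byte values and function names are placeholders, and variable-length parsing is omitted.

```python
def lay_out(exu, flow, base=0):
    """Place two instruction streams around one shared entry address.

    Hypothetical layout: the exu stream is stored at increasing addresses
    starting at the entry point, the flow stream at decreasing addresses
    just below it. Returns (memory, entry).
    """
    entry = base + len(flow)
    memory = {}
    for i, byte in enumerate(exu):
        memory[entry + i] = byte        # entry, entry+1, entry+2, ...
    for i, byte in enumerate(flow):
        memory[entry - 1 - i] = byte    # entry-1, entry-2, ...
    return memory, entry


def fetch(memory, entry, n_exu, n_flow):
    """Two independent decoders, each walking only its own stream from the entry."""
    exu = [memory[entry + i] for i in range(n_exu)]
    flow = [memory[entry - 1 - i] for i in range(n_flow)]
    return exu, flow


mem, entry = lay_out(exu=[0xA1, 0xA2, 0xA3], flow=[0xF1, 0xF2])
exu, flow = fetch(mem, entry, n_exu=3, n_flow=2)
print(entry, [hex(b) for b in exu], [hex(b) for b in flow])
```

Because each decoder only ever parses half of the instructions, each can in principle be simpler than a single decoder handling both; this is the intuition behind the split, not a measured result.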

Techniques such as Phasing, Pipelining, explicitly scheduled load latencies, branch Prediction across several jumps combined with prefetch, and a very short pipeline all minimize the occurrence and impact of stalls, keeping Execution unhindered.
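Explicitly scheduled load latency can be sketched as a toy cycle loop: the compiler issues a load with a chosen delay and fills the intervening cycles with independent work, so the value is available exactly when its consumer issues. The op tuples, the `run` function, and the 3-cycle delay are hypothetical, and the model ignores cache misses entirely.

```python
def run(program, memory, cycles):
    """Toy cycle loop with compiler-chosen load delays (hypothetical model).

    `program` maps a cycle number to the ops issued in that cycle:
    ("load", addr, delay) delivers memory[addr] exactly `delay` cycles later,
    ("work", label) stands for any independent op placed in the gap.
    Returns a trace of (cycle, event) pairs.
    """
    in_flight = []   # (retire_cycle, addr) for loads still outstanding
    trace = []
    for cycle in range(cycles):
        # Loads retiring this cycle deliver their value (read from memory at
        # retirement) before this cycle's ops issue, so a consumer can use it.
        for retire, addr in in_flight:
            if retire == cycle:
                trace.append((cycle, f"load [{addr:#x}] -> {memory[addr]}"))
        in_flight = [(r, a) for r, a in in_flight if r != cycle]
        for op in program.get(cycle, []):
            if op[0] == "load":
                _, addr, delay = op
                in_flight.append((cycle + delay, addr))
                trace.append((cycle, f"issue load [{addr:#x}], delay {delay}"))
            else:
                trace.append((cycle, f"issue {op[1]}"))
    return trace


memory = {0x40: 7}
program = {
    0: [("load", 0x40, 3)],              # issue the load early...
    1: [("work", "mul")],                # ...and fill the delay with independent work
    2: [("work", "add")],
    3: [("work", "use loaded value")],   # consumer issues exactly when the value arrives
}
for cycle, event in run(program, memory, cycles=4):
    print(cycle, event)
```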

Metadata enables safe speculative execution across untaken branches and even exceptions, further reducing stalls and increasing parallelism. It also yields large savings in opcode complexity and size.
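Deferring a fault through Metadata can be sketched as follows. The sketch assumes a "Not a Result" (NaR) marker carried with a value: a speculative operation that would fault produces a NaR instead, NaRs propagate through further speculative operations, and only a non-speculative operation such as a store actually raises the fault. The names `NaR`, `spec_div`, `select`, and `store` are illustrative, not Mill operation names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NaR:
    """'Not a Result': marks a value whose computation would have faulted."""
    reason: str


def spec_div(a, b):
    """Speculable op (hypothetical): never traps; passes NaR through or creates one."""
    for operand in (a, b):
        if isinstance(operand, NaR):
            return operand              # propagate the first NaR operand unchanged
    if b == 0:
        return NaR("divide by zero")    # defer the fault instead of trapping now
    return a // b


def select(cond, if_true, if_false):
    """Branch-free choice between two already computed values."""
    return if_true if cond else if_false


def store(memory, addr, value):
    """Non-speculable op: a NaR reaching here finally raises the deferred fault."""
    if isinstance(value, NaR):
        raise ArithmeticError(f"deferred fault: {value.reason}")
    memory[addr] = value


x, y = 10, 0
quotient = spec_div(x, y)               # computed before the condition is known: NaR, no trap
result = select(y != 0, quotient, -1)   # the untaken path's NaR is simply discarded
mem = {}
store(mem, 0, result)                   # stores -1 without faulting
print(quotient, mem)
```

Had the program stored `quotient` directly, `store` would raise, which is the point: the fault surfaces only if the speculative result is actually used.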

A new Memory access model, with caches that work entirely on virtual addresses and Protection mechanisms decoupled from address translation, means the processor never waits for address translation except where that latency is already masked by a DRAM access.
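A toy model of that ordering: this sketch assumes a single cache level keyed by virtual address, a permission table checked on the virtual address independently of translation, and a page table consulted only on a cache miss, when the slow DRAM access would hide the translation cost anyway. `ToyMemorySystem`, the 4096-byte page size, and the permission sets are hypothetical.

```python
class ToyMemorySystem:
    """Hypothetical model: virtually addressed cache, separate protection check,
    address translation only on a cache miss."""

    PAGE_SIZE = 4096

    def __init__(self, page_table, permissions, dram):
        self.cache = {}                 # virtual address -> value (no translation needed)
        self.page_table = page_table    # virtual page -> physical page
        self.permissions = permissions  # virtual page -> set of rights, e.g. {"r", "w"}
        self.dram = dram                # physical address -> value
        self.translations = 0           # how many times the page table was consulted

    def load(self, vaddr):
        vpage, offset = divmod(vaddr, self.PAGE_SIZE)
        # Protection is checked on the virtual address, independent of translation.
        if "r" not in self.permissions.get(vpage, set()):
            raise PermissionError(f"no read right for address {vaddr:#x}")
        if vaddr in self.cache:
            return self.cache[vaddr]    # cache hit: translation never happens
        # Cache miss: translate on the way to DRAM, whose latency dominates anyway.
        self.translations += 1
        paddr = self.page_table[vpage] * self.PAGE_SIZE + offset
        value = self.dram[paddr]
        self.cache[vaddr] = value
        return value


ms = ToyMemorySystem(page_table={1: 7}, permissions={1: {"r"}}, dram={7 * 4096 + 16: 99})
first = ms.load(4096 + 16)    # miss: translated once
second = ms.load(4096 + 16)   # hit: served by virtual address alone
print(first, second, ms.translations)   # 99 99 1
```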