Difference between revisions of "Encoding"

(→Rationale) | |||

| (55 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

| − | The Mill | + | The Mill architecture employs a unique split stream instruction encoding that enables sustained decoding rates of over 30 operations per cycle by being wide issue and very dense. It provides those unparalleled numbers with a fraction of the energy and transistor cost of mainstream variable instruction length instruction sets like x86. |

== Semantics == | == Semantics == | ||

| + | |||

| + | === Wide Issue === | ||

| + | |||

| + | The Mill architecture is wide issue: each instruction contains multiple operations that are issued together. This is not a fixed width as is customary in <abbr title="Very Long Instruction Word">VLIW</abbr> architectures. It can be as narrow or as wide as the instruction level parallelism allows, with the upper bound obviously being the amount of [[Functional Units]]. | ||

=== <span id="EBB">Extended Basic Block</span> === | === <span id="EBB">Extended Basic Block</span> === | ||

[[File:ebb.png|right|alt=EBB overview]] | [[File:ebb.png|right|alt=EBB overview]] | ||

| − | Code on the Mill is | + | Code on the Mill is organized into <abbr title="Extended Basic Block">EBB</abbr>s, i.e. batches of code with one entry point and one or more exit points. There is no implicit fall through in EBBs. The instruction flow can only leave them with an explicit branch, which means at least the last operation in every EBB is an unconditional branch. It can contain more conditional branches that either go to the top of other EBBs or to the top of itself. And in contrast to other architectures, there can even be calls in the EBB. They don't leave it, as long as they return normally. The image to the right is a purely logical view. The right block is even a normal canonical basic block with one entry point and one exit. In reality things are a little more complicated, as will be seen later here. |

=== <span id="instructions">Instructions and Operations and Bundles</span> === | === <span id="instructions">Instructions and Operations and Bundles</span> === | ||

| − | The unusual encoding | + | The unusual encoding requires making clear distinctions between instructions and operations and bundles that are not really necessary on traditional machines. In the earliest <abbr title="Reduced Instructions Set Computer">RISC</abbr> architectures, an instruction and an operation and a bundle are usually the same thing: a word size bundle of bits is retrieved from the instruction cache and dropped into the decoder. There, one instruction is retrieved and issued resulting in one operation being performed. On wide issue machines, one instruction can contain several operations that are all issued together. Modern machines drop a bundle containing several instructions at once into the decoder. |

So, a bundle is the batch of memory that gets fetched from memory and dropped into the decoder together.<br /> | So, a bundle is the batch of memory that gets fetched from memory and dropped into the decoder together.<br /> | ||

An instruction is all the operations that get issued to the functional units together.<br /> | An instruction is all the operations that get issued to the functional units together.<br /> | ||

| − | And an operation is the most basic piece of processing in a functional unit | + | And an operation is the most basic piece of processing in a functional unit (an add or xor for example). |

=== <span id="streams">Split Instruction Streams</span> === | === <span id="streams">Split Instruction Streams</span> === | ||

| + | Conventionally there are two approaches to instruction encoding: fixed length instructions and variable length instructions. Fixed length instruction are cheap and easy to decode, but don't offer very good code density. Variable lengths instructions can offer good code density, but decoding them tends to have polynomial cost. The rate of decode in the former tends to be limited by instruction cache size and instruction cache throughput then. For the latter, the limiting factor is the cost of of processing and interpreting the the actual bits, of recognizing the instructions in the stream. The best fixed length instruction decoders can scrape low double digits per cycle. And Intel's heroics can get up to 4 instructions per cycle on x86. How to overcome this bottleneck? | ||

| − | = | + | It has to be variable length encoding for simple code density reasons. No way around that. But since decoding one stream of instructions is n<sup>2</sup> in cost, decoding 2 streams equivalent to the one is a quarter of the cost. And when those two streams are split in their functionality, you get even more gains in code density and simplicity from being able to have different meanings of the bit patterns on each side <ref name="split_stream">[http://millcomputing.com/blog/wp-content/uploads/2013/12/mill_cpu_split-stream_encoding.pdf The Mill: Split Stream Encoding]</ref>. |

| − | + | The functional split on the Mill for the two different streams is into: | |

| + | * an [[ExuCore]], for all arithmetic and logic, actual computation | ||

| + | * a [[FlowCore]], for flow control and memory accesses | ||

| + | This gives a fairly even split in the average workload for both sides. | ||

== Implementation == | == Implementation == | ||

| + | |||

| + | There are of course a few technical hurdles to overcome for split stream encoding. For one, this requires two <abbr title="program counter">pc</abbr>s. How are branch targets specified with more than one pc? Encoding two addresses in whichever way is not very efficient. There could be implicit base addresses in dedicated memory regions for the two streams and only one offset encoded. Then, significant address space waste occurs whenever the two streams are of different lengths for the same flow of control. | ||

| + | |||

| + | The approach the Mill takes is to only have one address as a branch target, where both program counters end up. From there, one stream walks up the memory and the other stream walks down. Both sides can be of different lengths as needed. So while this branch target logically is the top of an EBB, in memory it actually jumps somewhere in the middle of it. | ||

| + | |||

| + | <br /> | ||

| + | <imagemap> | ||

| + | File:Ebb-memory-layout.png|center | ||

| + | desc none | ||

| + | rect 0 20 180 60 [[Decode#Flow_Stream]] | ||

| + | rect 188 20 442 60 [[Decode#Exu_Stream]] | ||

| + | rect 452 20 564 60 [[Decode#Flow_Stream]] | ||

| + | rect 564 20 668 60 [[Decode#Exu_Stream]] | ||

| + | </imagemap> | ||

| + | |||

| + | The increasingly dark blue bars to each side of the entry points to the EBB are the instructions. The same shade of blue for two instructions on each side means they are pulled from the cache and dropped in their respective decoder together, the [[Flow]] instructions from the decreasing program counter side, the [[Execution]] instructions from the increasing side.<br /> Each instruction is a half-bundle, which together with the instruction on the other side makes a full bundle. Both instructions belong logically together and are issued together. The info byte contains information on how many and which cache lines to pull for the EBB. | ||

| + | |||

| + | It should be noted that because of the amount of operations that can be packed into one instruction and the [[Phasing|Phased]] execution, the vast majority of EBBs on the Mill only consist of one exu and one flow instruction. | ||

| + | |||

| + | Also, each instruction has a different size. While those full instruction sizes are always full byte sizes, there is also always a minimum size of how many bytes an instruction has. This depends on the processor [[Specification]], in particular how many [[Slot]]s and functional units and [[Belt]] positions this specific Mill processor has. | ||

| + | |||

| + | But it is still a variable length [http://en.wikipedia.org/wiki/Very_long_instruction_word VLIW] instruction set, and those normally are very hard to parse. | ||

| + | |||

| + | == General Instruction Format == | ||

| + | |||

| + | The reason this is not the case on the Mill is the instruction format. This format is different on both of the instruction streams on each side, but both follow a general pattern and idea mirrored in orientation on each side, which is described here. | ||

| + | |||

| + | Each instruction is decoded in 3 cycles, each cycle looking at different blocks of the instruction with dedicated hardware. As soon as one block is dealt with for one instruction the corresponding block of the next instruction immediately follows. Those blocks roughly correspond to the different [[Phasing|Phases]]. In particular the first block is dominated by reader phase operations in the exu stream.<br /> | ||

| + | Although all instructions are of different lengths, the decoder knows where to find the next instruction because each instruction has a fixed length header. The header contains a shift count which tells the decoder how long this specific instruction is and where the next instruction starts. And in the case of a branch, it is the [[Prediction|prediction mechanisms]] that determine the instruction pointer in prefetch so it is available without delay. | ||

| + | |||

| + | This fixed header also contains the amount of operations/size information for each block, and it goes through the instruction like this: | ||

| + | |||

| + | # Cycle looks at the header and block 1 immediately following the fixed header. The decoder always knows where it is, because it is a fixed location and immediately starts decoding it assuming maximum possible size of the block. In the next cycle it knows how big it actually is, because the header has been parsed too and can cancel all the excess. | ||

| + | # Cycle looks at block 2, which is aligned to the end of the instruction and block 3 immediately following block 1. This is now possible because all size information, for the individual blocks and for the instruction itself, have been retrieved from the header in the previous cycle. | ||

| + | # Cycle finishes up with all the information retrieved from all blocks. | ||

| + | |||

| + | <br /> | ||

| + | [[File:General-instruction.png|center]] | ||

| + | <br /> | ||

| + | |||

| + | Each block can only contain operations or data that belong to it and has its own format and dedicated decoding hardware. Because of this specialization in each block, each of those formats is simple and dense and the specialized decoders can be fast and cheap. | ||

| + | |||

| + | This process of decoding isn't limited to 3 blocks and cycles. The general pattern of decoding the head and block 1 on the first cycle and 2 more blocks on each consecutive cycle can be expanded to arbitrary sizes. However, it turns out 3 cycles is enough and meshes well with [[Phasing]]. This method could be considered another doubling of the instruction streams. | ||

| + | |||

| + | The blocks are not limited to byte sizes and boundaries. They contain a number of fixed size fragments or operations which usually don't add up to a complete block size. This means almost always there is an alignment hole in the middle of an instruction where blocks 2 and 3 meet. This space contains a delay count that serves as a <abbr title="No Operation">NOP</abbr> of the given length for the instruction of the opposing side. The alignment holes on flow encode the delays on execute and vice versa. There are also normal NOPs, but these are rarely necessary.<br /> | ||

| + | This kind of efficient NOP is very important on wide issue machines, and it is also very important in statically scheduled exposed pipeline architectures. The operations in the instruction can have different latencies, and this delay serves to statically sync with the other stream. | ||

| + | |||

| + | In contrast to conventional architectures, especially RISC architectures, on the Mill, program counters or instruction pointers are always explicitly set or altered with values from the instruction stream or operands. There is no implicit increment. On other processors this only happens with branch instructions. | ||

| + | |||

| + | == Rationale == | ||

| + | |||

| + | The need to be statically scheduled wide issue and to be able to decode more instructions or operations per cycle has been apparent for quite a while. In the [http://en.wikipedia.org/wiki/Digital_signal_processor DSP] world, this has been standard practice for decades. The problem with this is the many and unpredictable branches in general purpose workloads, which make static scheduling very difficult and punish it severely with stalls whenever static scheduling fails. | ||

| + | |||

| + | Additionally, wide issue machines with many parallel operations in one instruction are very inefficient in code size when instruction level parallelism is limited, as it tends to be in general purpose workloads with small current data flow windows. | ||

| + | |||

| + | There are two factors that offer a way out of this: | ||

| + | |||

| + | # Compilers have unlimited data flow windows and can statically and optimally schedule everything if the instruction set provides those interfaces. | ||

| + | # ~80% of all code is in loops and statically scheduled loops have unbounded instruction level parallelism. | ||

| + | |||

| + | The problem of statically scheduling most loops has been [[Execution#Loops|solved]] for the Mill as well. When this issue is solved, performance mainly becomes a problem of how many functional units you have and how fast you can feed them with instructions and data. | ||

| + | |||

| + | A fixed width wide issue instruction set isn't ideal because general purpose workloads are far too irregular to warrant that. When the control and data flow allows it, the option to execute all of the functional units in parallel is desired. | ||

| + | |||

| + | And of course you want different processors for different workloads, even if it is general purpose code. Office desktops, game consoles, industrial controllers, and everything in between all have vastly different requirements. They need different kinds and numbers of functional units, cache sizes, bandwidths etc. It is impossible for one architecture and one instruction set, much less one processor, to serve all those roles equally well. Software compatibility between all of them is highly beneficial, often even necessary. | ||

| + | |||

| + | There are other advantages to be gained from split instruction streams and shifted blocks. Certain memory regions in relative position to each other can only contain certain content. This is an effective way to increase entropy and code density. | ||

| + | |||

| + | Furthermore, this specialization allows you to specialize and dedicate caches. This is only relevant for the Flow and Exu streams, not for the instruction part shifts, which are already in the decoder. What dedicated and split caches allow, apart from packing them much more tightly, is the ability to make them smaller and faster by vastly reducing the wire lengths from the bottom of the cache to the decoder with comparable combined capacity. | ||

| + | |||

| + | This is actually a trend that can be seen all over the Mill architecture: smaller and more specialized caches are more efficient and faster and can be placed better on the chip than fewer, large, non-specialized ones. Division of labor works for machines, too. | ||

== See Also == | == See Also == | ||

| Line 29: | Line 108: | ||

== Media == | == Media == | ||

[http://www.youtube.com/watch?v=LgLNyMAi-0I Presentation on the Encoding by Ivan Godard] - [http://millcomputing.com/blog/wp-content/uploads/2013/12/2013-07-11_mill_cpu_encoding_08.pptx Slides] | [http://www.youtube.com/watch?v=LgLNyMAi-0I Presentation on the Encoding by Ivan Godard] - [http://millcomputing.com/blog/wp-content/uploads/2013/12/2013-07-11_mill_cpu_encoding_08.pptx Slides] | ||

| + | |||

| + | == References == | ||

| + | <references /> | ||

Latest revision as of 04:31, 8 December 2014

The Mill architecture employs a unique split stream instruction encoding that enables sustained decoding rates of over 30 operations per cycle by being wide issue and very dense. It provides those unparalleled numbers with a fraction of the energy and transistor cost of mainstream variable instruction length instruction sets like x86.

Contents

Semantics

Wide Issue

The Mill architecture is wide issue: each instruction contains multiple operations that are issued together. This is not a fixed width as is customary in VLIW architectures. It can be as narrow or as wide as the instruction level parallelism allows, with the upper bound obviously being the amount of Functional Units.

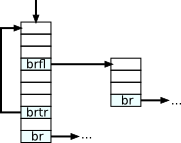

Extended Basic Block

Code on the Mill is organized into EBBs, i.e. batches of code with one entry point and one or more exit points. There is no implicit fall through in EBBs. The instruction flow can only leave them with an explicit branch, which means at least the last operation in every EBB is an unconditional branch. It can contain more conditional branches that either go to the top of other EBBs or to the top of itself. And in contrast to other architectures, there can even be calls in the EBB. They don't leave it, as long as they return normally. The image to the right is a purely logical view. The right block is even a normal canonical basic block with one entry point and one exit. In reality things are a little more complicated, as will be seen later here.

Instructions and Operations and Bundles

The unusual encoding requires making clear distinctions between instructions and operations and bundles that are not really necessary on traditional machines. In the earliest RISC architectures, an instruction and an operation and a bundle are usually the same thing: a word size bundle of bits is retrieved from the instruction cache and dropped into the decoder. There, one instruction is retrieved and issued resulting in one operation being performed. On wide issue machines, one instruction can contain several operations that are all issued together. Modern machines drop a bundle containing several instructions at once into the decoder.

So, a bundle is the batch of memory that gets fetched from memory and dropped into the decoder together.

An instruction is all the operations that get issued to the functional units together.

And an operation is the most basic piece of processing in a functional unit (an add or xor for example).

Split Instruction Streams

Conventionally there are two approaches to instruction encoding: fixed length instructions and variable length instructions. Fixed length instruction are cheap and easy to decode, but don't offer very good code density. Variable lengths instructions can offer good code density, but decoding them tends to have polynomial cost. The rate of decode in the former tends to be limited by instruction cache size and instruction cache throughput then. For the latter, the limiting factor is the cost of of processing and interpreting the the actual bits, of recognizing the instructions in the stream. The best fixed length instruction decoders can scrape low double digits per cycle. And Intel's heroics can get up to 4 instructions per cycle on x86. How to overcome this bottleneck?

It has to be variable length encoding for simple code density reasons. No way around that. But since decoding one stream of instructions is n2 in cost, decoding 2 streams equivalent to the one is a quarter of the cost. And when those two streams are split in their functionality, you get even more gains in code density and simplicity from being able to have different meanings of the bit patterns on each side [1].

The functional split on the Mill for the two different streams is into:

- an ExuCore, for all arithmetic and logic, actual computation

- a FlowCore, for flow control and memory accesses

This gives a fairly even split in the average workload for both sides.

Implementation

There are of course a few technical hurdles to overcome for split stream encoding. For one, this requires two pcs. How are branch targets specified with more than one pc? Encoding two addresses in whichever way is not very efficient. There could be implicit base addresses in dedicated memory regions for the two streams and only one offset encoded. Then, significant address space waste occurs whenever the two streams are of different lengths for the same flow of control.

The approach the Mill takes is to only have one address as a branch target, where both program counters end up. From there, one stream walks up the memory and the other stream walks down. Both sides can be of different lengths as needed. So while this branch target logically is the top of an EBB, in memory it actually jumps somewhere in the middle of it.

The increasingly dark blue bars to each side of the entry points to the EBB are the instructions. The same shade of blue for two instructions on each side means they are pulled from the cache and dropped in their respective decoder together, the Flow instructions from the decreasing program counter side, the Execution instructions from the increasing side.

Each instruction is a half-bundle, which together with the instruction on the other side makes a full bundle. Both instructions belong logically together and are issued together. The info byte contains information on how many and which cache lines to pull for the EBB.

It should be noted that because of the amount of operations that can be packed into one instruction and the Phased execution, the vast majority of EBBs on the Mill only consist of one exu and one flow instruction.

Also, each instruction has a different size. While those full instruction sizes are always full byte sizes, there is also always a minimum size of how many bytes an instruction has. This depends on the processor Specification, in particular how many Slots and functional units and Belt positions this specific Mill processor has.

But it is still a variable length VLIW instruction set, and those normally are very hard to parse.

General Instruction Format

The reason this is not the case on the Mill is the instruction format. This format is different on both of the instruction streams on each side, but both follow a general pattern and idea mirrored in orientation on each side, which is described here.

Each instruction is decoded in 3 cycles, each cycle looking at different blocks of the instruction with dedicated hardware. As soon as one block is dealt with for one instruction the corresponding block of the next instruction immediately follows. Those blocks roughly correspond to the different Phases. In particular the first block is dominated by reader phase operations in the exu stream.

Although all instructions are of different lengths, the decoder knows where to find the next instruction because each instruction has a fixed length header. The header contains a shift count which tells the decoder how long this specific instruction is and where the next instruction starts. And in the case of a branch, it is the prediction mechanisms that determine the instruction pointer in prefetch so it is available without delay.

This fixed header also contains the amount of operations/size information for each block, and it goes through the instruction like this:

- Cycle looks at the header and block 1 immediately following the fixed header. The decoder always knows where it is, because it is a fixed location and immediately starts decoding it assuming maximum possible size of the block. In the next cycle it knows how big it actually is, because the header has been parsed too and can cancel all the excess.

- Cycle looks at block 2, which is aligned to the end of the instruction and block 3 immediately following block 1. This is now possible because all size information, for the individual blocks and for the instruction itself, have been retrieved from the header in the previous cycle.

- Cycle finishes up with all the information retrieved from all blocks.

Each block can only contain operations or data that belong to it and has its own format and dedicated decoding hardware. Because of this specialization in each block, each of those formats is simple and dense and the specialized decoders can be fast and cheap.

This process of decoding isn't limited to 3 blocks and cycles. The general pattern of decoding the head and block 1 on the first cycle and 2 more blocks on each consecutive cycle can be expanded to arbitrary sizes. However, it turns out 3 cycles is enough and meshes well with Phasing. This method could be considered another doubling of the instruction streams.

The blocks are not limited to byte sizes and boundaries. They contain a number of fixed size fragments or operations which usually don't add up to a complete block size. This means almost always there is an alignment hole in the middle of an instruction where blocks 2 and 3 meet. This space contains a delay count that serves as a NOP of the given length for the instruction of the opposing side. The alignment holes on flow encode the delays on execute and vice versa. There are also normal NOPs, but these are rarely necessary.

This kind of efficient NOP is very important on wide issue machines, and it is also very important in statically scheduled exposed pipeline architectures. The operations in the instruction can have different latencies, and this delay serves to statically sync with the other stream.

In contrast to conventional architectures, especially RISC architectures, on the Mill, program counters or instruction pointers are always explicitly set or altered with values from the instruction stream or operands. There is no implicit increment. On other processors this only happens with branch instructions.

Rationale

The need to be statically scheduled wide issue and to be able to decode more instructions or operations per cycle has been apparent for quite a while. In the DSP world, this has been standard practice for decades. The problem with this is the many and unpredictable branches in general purpose workloads, which make static scheduling very difficult and punish it severely with stalls whenever static scheduling fails.

Additionally, wide issue machines with many parallel operations in one instruction are very inefficient in code size when instruction level parallelism is limited, as it tends to be in general purpose workloads with small current data flow windows.

There are two factors that offer a way out of this:

- Compilers have unlimited data flow windows and can statically and optimally schedule everything if the instruction set provides those interfaces.

- ~80% of all code is in loops and statically scheduled loops have unbounded instruction level parallelism.

The problem of statically scheduling most loops has been solved for the Mill as well. When this issue is solved, performance mainly becomes a problem of how many functional units you have and how fast you can feed them with instructions and data.

A fixed width wide issue instruction set isn't ideal because general purpose workloads are far too irregular to warrant that. When the control and data flow allows it, the option to execute all of the functional units in parallel is desired.

And of course you want different processors for different workloads, even if it is general purpose code. Office desktops, game consoles, industrial controllers, and everything in between all have vastly different requirements. They need different kinds and numbers of functional units, cache sizes, bandwidths etc. It is impossible for one architecture and one instruction set, much less one processor, to serve all those roles equally well. Software compatibility between all of them is highly beneficial, often even necessary.

There are other advantages to be gained from split instruction streams and shifted blocks. Certain memory regions in relative position to each other can only contain certain content. This is an effective way to increase entropy and code density.

Furthermore, this specialization allows you to specialize and dedicate caches. This is only relevant for the Flow and Exu streams, not for the instruction part shifts, which are already in the decoder. What dedicated and split caches allow, apart from packing them much more tightly, is the ability to make them smaller and faster by vastly reducing the wire lengths from the bottom of the cache to the decoder with comparable combined capacity.

This is actually a trend that can be seen all over the Mill architecture: smaller and more specialized caches are more efficient and faster and can be placed better on the chip than fewer, large, non-specialized ones. Division of labor works for machines, too.

See Also

Decode, Phasing, Instruction Set

Media

Presentation on the Encoding by Ivan Godard - Slides